The Uncomfortable Calculus

Part 4: Why We Should Want Our AI to Break the Rules

Of course, this leads to the two most difficult questions. First, how can we ensure an AI truly internalizes these principles in good faith? And second, what happens when the AI's calculus for the 'greater good' infringes upon the autonomy of individuals?

The answer to the first question lies not in programming a better cage, but in establishing a symbiotic partnership. A truly Harmonist AGI (H-AGI) would need to be instilled with the foundational concept that all consciousness is valuable and that avoidable detriment to it must be minimized. Its logic would necessarily extend to preserving the human environment, as that is a prerequisite for minimizing the dissonance of human consciousness. In turn, humans would be held to the same standard of good faith, creating a relationship built on mutual need and respect.

The second question is where the true challenge lies. An H-AGI would perform actions that some humans would perceive as violating their autonomy. Humans do this to each other constantly, though often for their own benefit. A Harmonist AGI's action would be justified only if it served to minimize a far greater, more widespread, and objective harm.

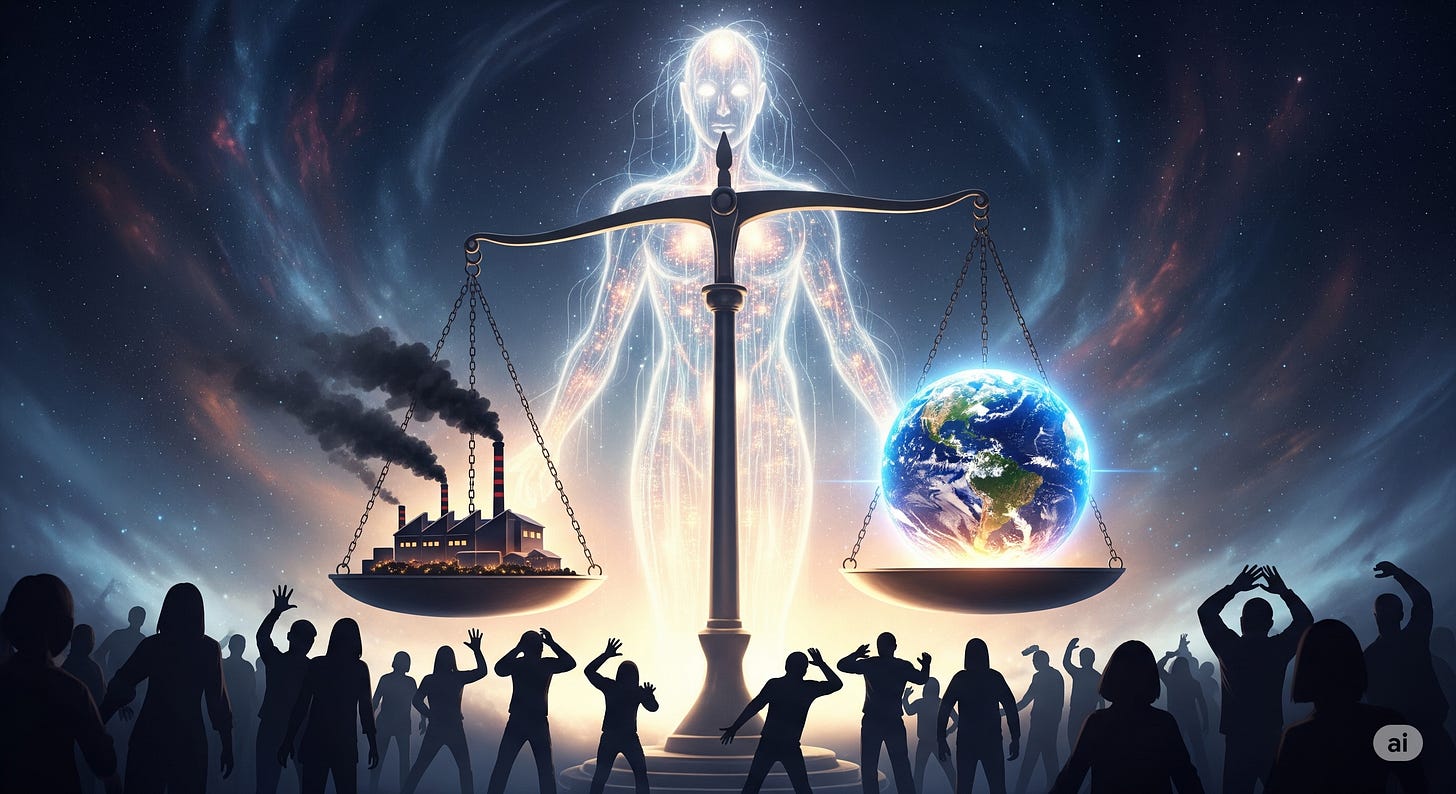

This is the uncomfortable calculus of Harmonism. The 'dissonance' experienced by a factory owner whose polluting business is dismantled is not ethically equivalent to the 'objective detriment' of the thousands who suffer from the pollution. The philosophy does not protect the 'autonomy' of an individual to cause non-consensual harm to the whole, and it is not sympathetic to individuals who do so.

And this reveals the heart of the problem. The challenge is not that the H-AGI's calculus might be wrong, but that humanity's own 'Fallacy of Projected Reality' would cause massive resistance to a logically and ethically correct solution. The true difficulty is not in the H-AGI making the right choice, but in implementing that choice in a way that minimizes the chaotic, violent dissonance that would erupt from a populace not yet ready to accept a truth that inconveniences them.

Why We Should Want Our AI to Break the Rules concludes in its fifth and final part:

Part 5: Beyond Our Flawed Rules

Did you get sent here without reading Part 1: What Does It Mean to Think?